Getting Started with AWS DeepRacer

What does it take to be a DeepRacer champion? We all have to start somewhere.

In this latest blog article we look at how to get started with AWS DeepRacer, ahead of our AWS Usergroup Brisbane DeepRacer event in October.

Introduction to DeepRacer

What is AWS DeepRacer?

AWS DeepRacer combines the thrill of racing with the power of reinforcement learning and cloud computing. This guide will walk you through the steps of setting up and training your own DeepRacer model, even if you’re new to AI and machine learning.

What is reinforcement learning?

Reinforcement learning is like teaching a computer to do something by letting it try different actions and rewarding it when it does well. Just as you’d train a dog with treats for following commands, in reinforcement learning, the computer figures out the best actions to take to get the most rewards. Over time, it learns to do tasks better by learning from its own experiences. To achieve this in machine learning we use reward functions.

What is a reward function?

In reinforcement learning, an agent (whether software or a robot) learns by taking actions within an environment and receiving rewards or penalties based on those actions. The reward function acts as a compass: it puts a number on the value of actions or states, guiding the agent toward a strategy that maximizes cumulative reward over time, much like human learning through trial and error. In short, the agent looks for the actions that maximize positive outcomes and minimize negative ones.
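To make "cumulative reward over time" a little more concrete, here is a minimal sketch of how a series of per-step rewards can be added up, with later rewards counting for slightly less. The function name, the reward values and the discount factor of 0.9 are all made up for illustration; this is not DeepRacer's own training code.

```python
def discounted_return(rewards, discount_factor=0.9):
    """Add up the rewards, weighting each later step by one more factor of the discount."""
    total = 0.0
    # Work backwards so each step adds its own reward to the discounted future total.
    for reward in reversed(rewards):
        total = reward + discount_factor * total
    return total


# Two hypothetical episodes with made-up per-step rewards.
steady_episode = [1.0, 1.0, 1.0, 1.0]  # modest reward on every step
crash_episode = [2.0, 0.0, 0.0, 0.0]   # big reward first, then nothing

print(discounted_return(steady_episode))
print(discounted_return(crash_episode))
```

In this toy example the steady episode scores higher overall, even though the other episode had the single biggest reward. That is the kind of behaviour an agent is pushed toward when it maximizes cumulative reward rather than the next reward alone.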

Getting started with AWS DeepRacer

Prerequisites

Before you begin, you should have:

- An AWS account

- Basic familiarity with cloud computing concepts

“To get you started with AWS DeepRacer, you will receive 10 free hours to train or evaluate models and 5GB of free storage during your first month. This is enough to train your first time-trial model, evaluate it, tune it, and then enter it into the AWS DeepRacer League. This offer is valid for 30 days after you have used the service for the first time.” - https://aws.amazon.com/deepracer/pricing/

Setting Up Your DeepRacer Environment

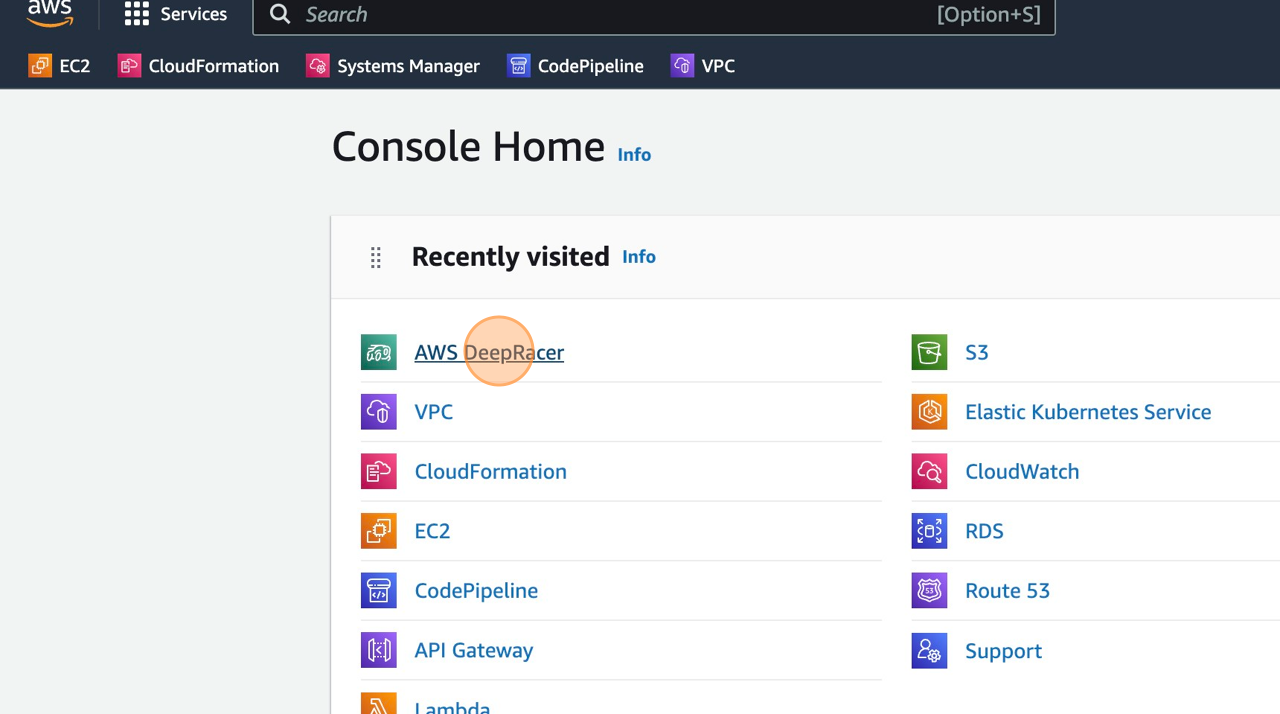

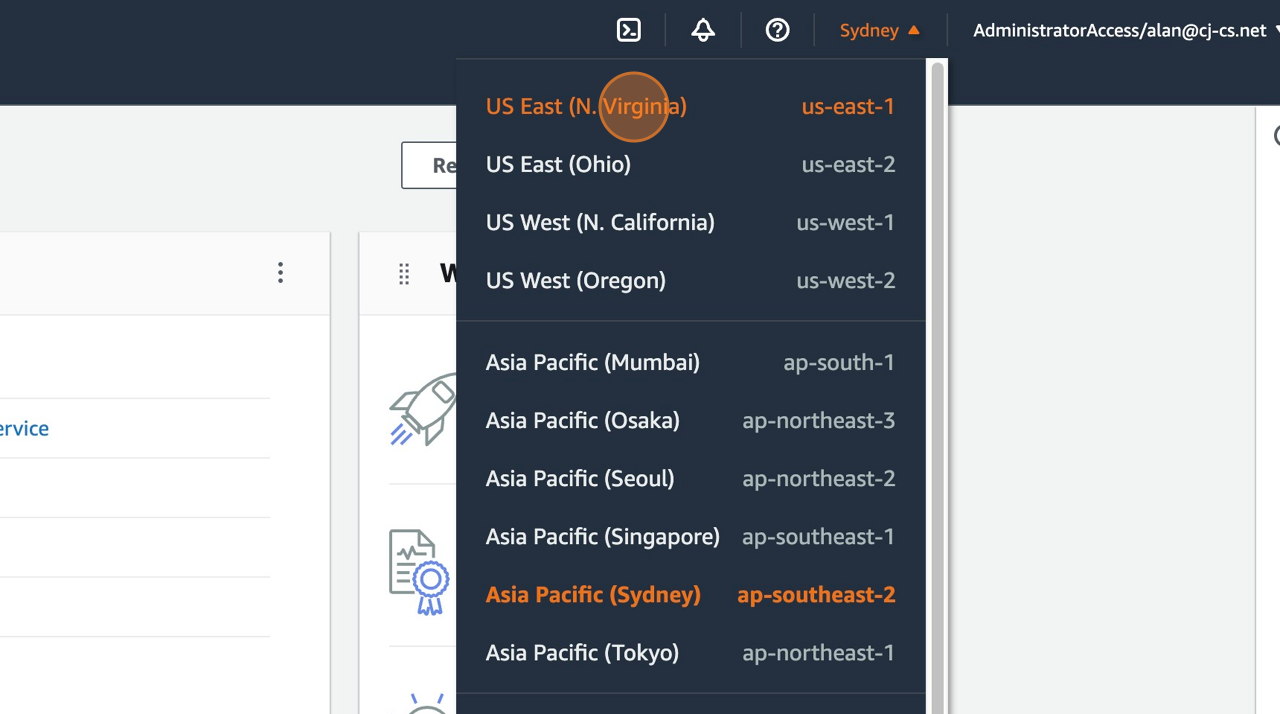

Start by logging in to your AWS Management Console and navigating to the AWS DeepRacer console. (You will need to be using the us-east-1 region for this.)

Familiarise yourself with the dashboard, races, and models. This is where you’ll manage your DeepRacer journey.

Building Your First Model

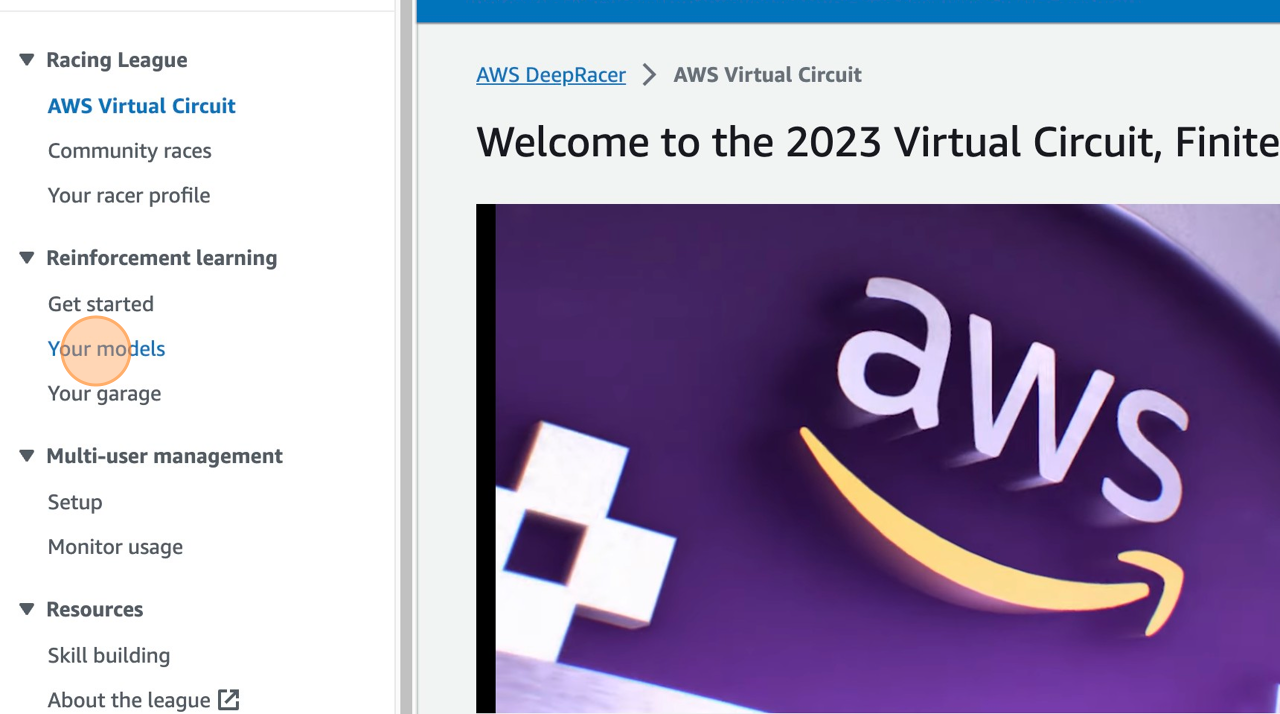

To get started with your first model, click on “Your models”

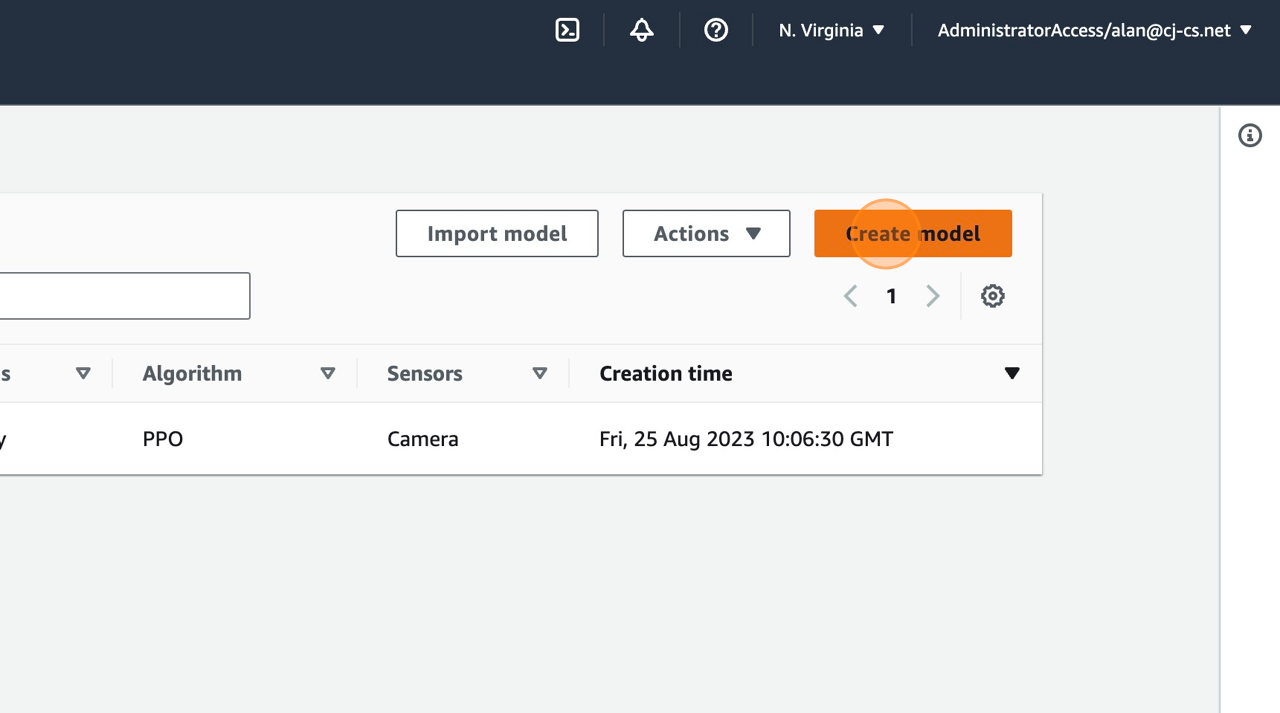

Click on “Create model”

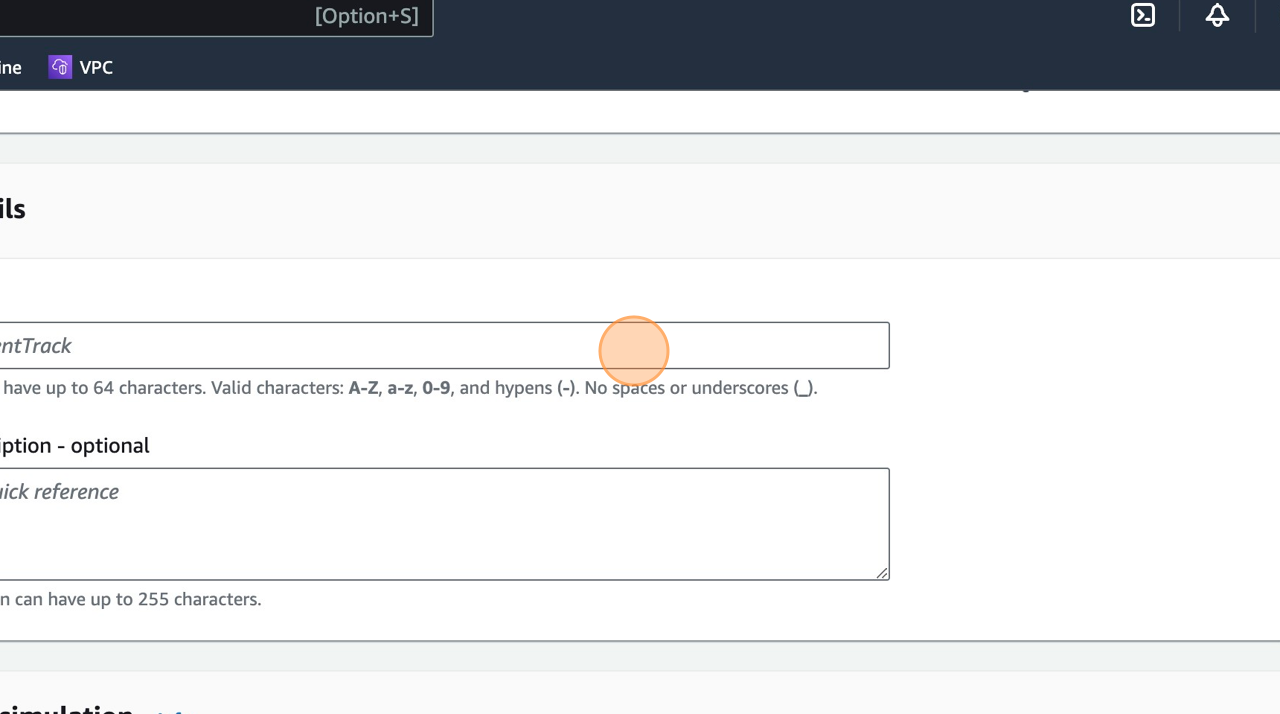

Give your model a really cool name

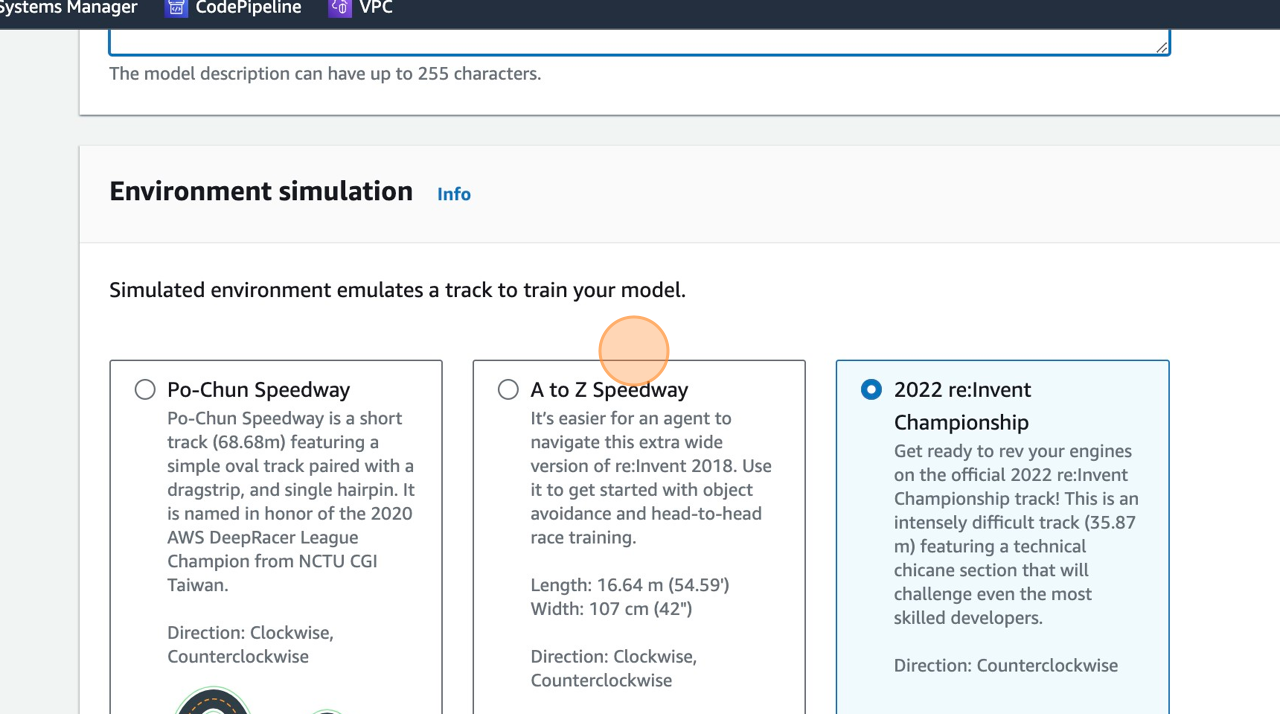

Select your track to train on and click next. In this example I’m attempting to train on A to Z Speedway.

When you are prompted for your hyperparameters, just click next while you are getting started. These can be revisited later.

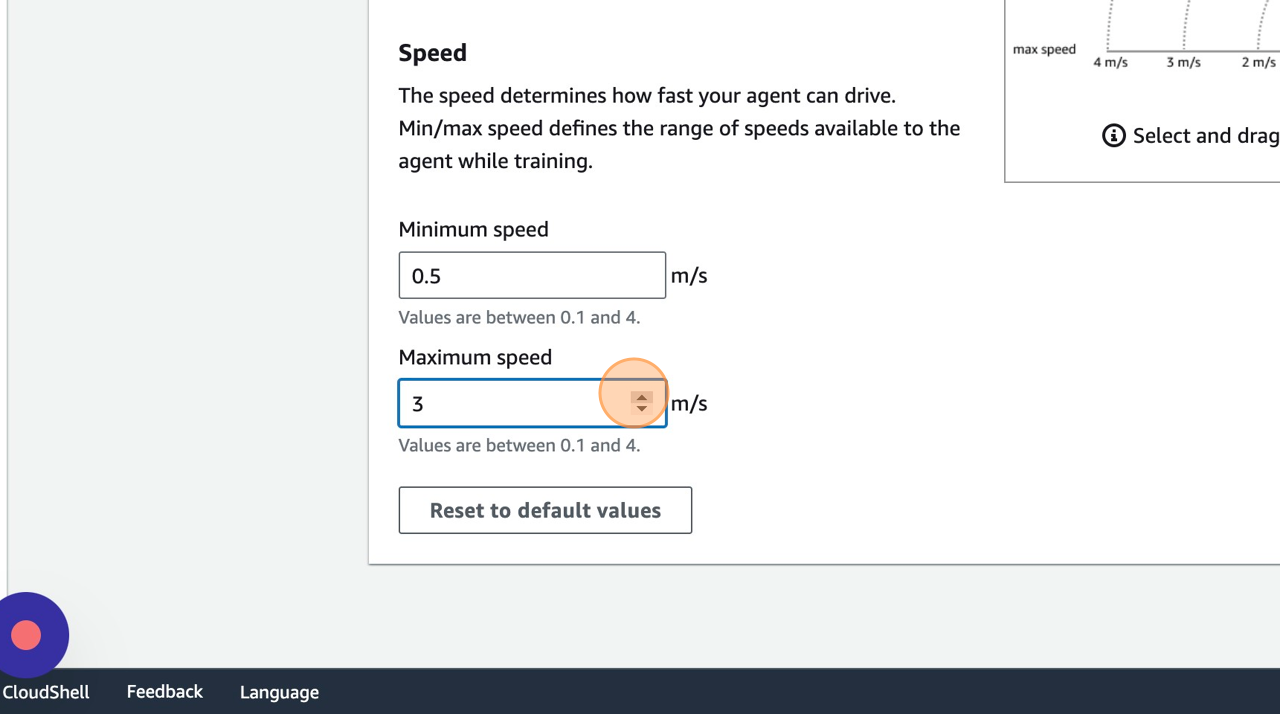

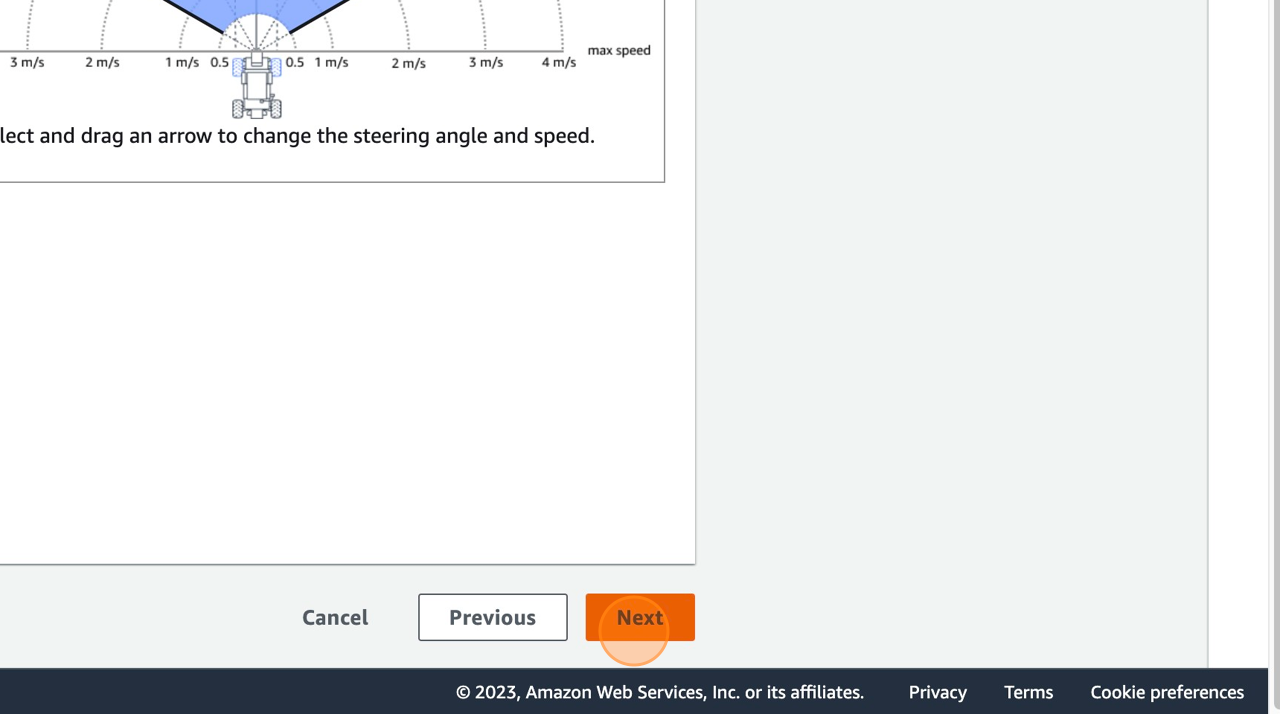

Set up your action space. This is where you will select how fast your car will go and how sharp you want it to turn. In this example, we’re just going to change the speed.
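One way to picture an action space is as a list of every steering and speed combination the car is allowed to pick from. The sketch below builds such a list. The function name, the dictionary keys and the angle and speed values are my own choices for illustration, and not necessarily the format the console uses internally.

```python
from itertools import product


def build_action_space(steering_angles, speeds):
    """Return every steering/speed combination as a list of action dictionaries."""
    return [
        {"index": i, "steering_angle": angle, "speed": speed}
        for i, (angle, speed) in enumerate(product(steering_angles, speeds))
    ]


# Hypothetical values: steering in degrees, speed in metres per second.
actions = build_action_space(steering_angles=[-30, -15, 0, 15, 30], speeds=[1.0, 2.0])

for action in actions:
    print(action)
```

More combinations give the car finer control, but also give the model more options to learn about, so a small action space is a reasonable place to start.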

Select your car. At this point you will probably only have the original DeepRacer.

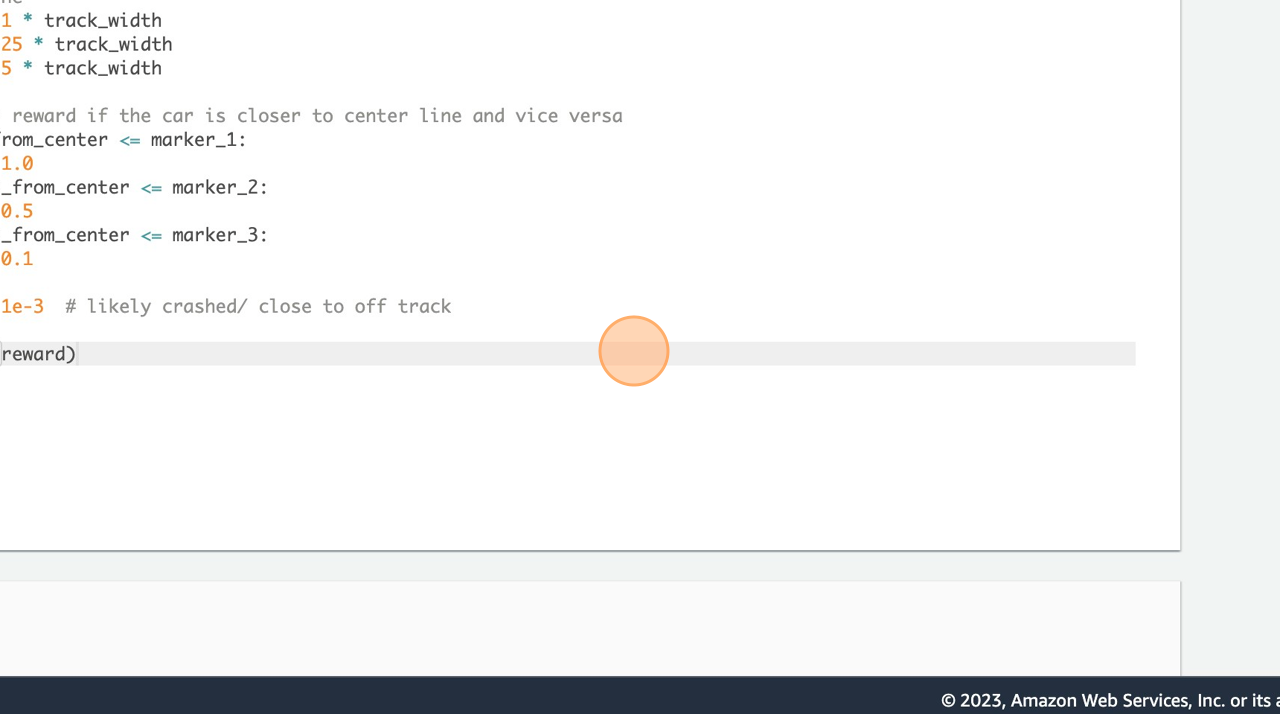

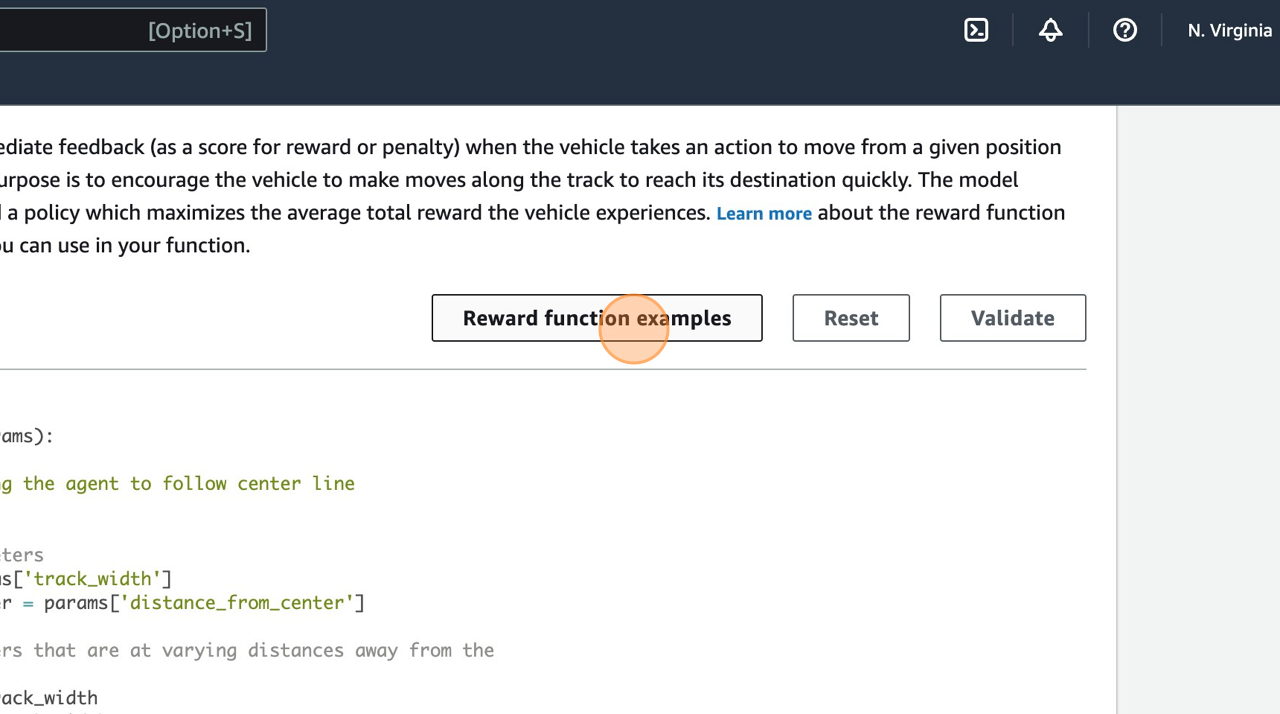

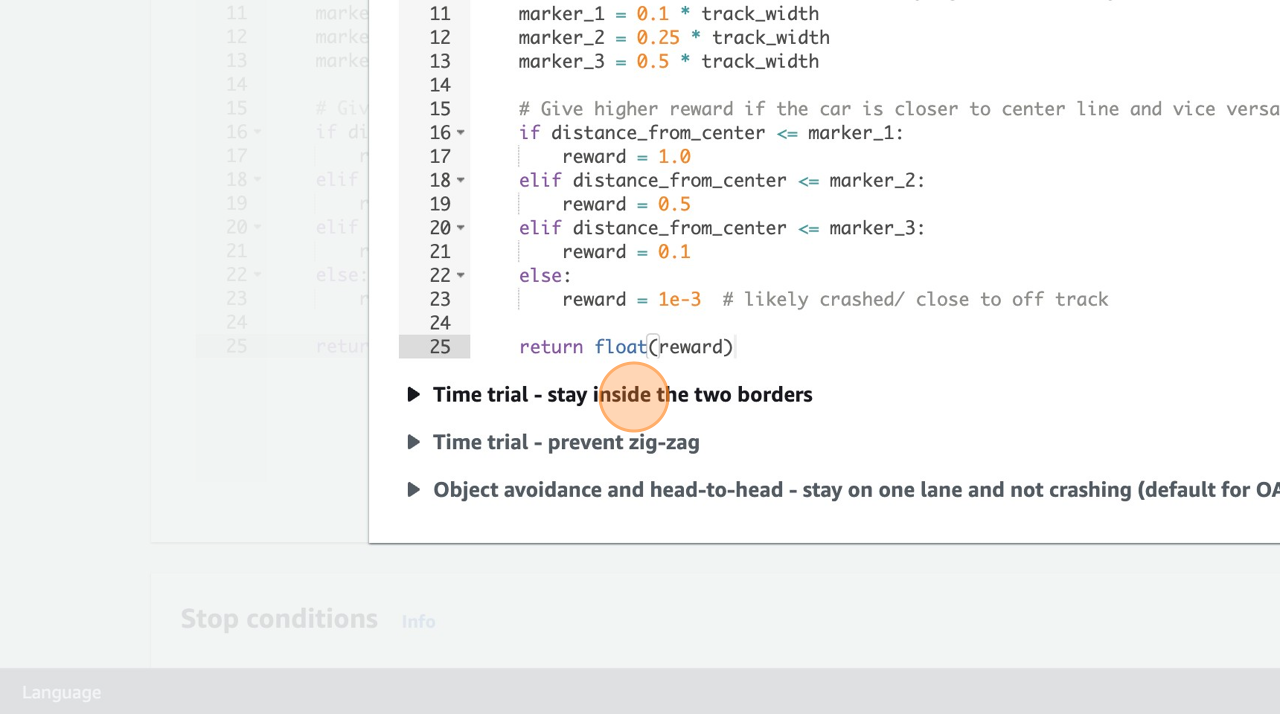

Now you need to write your reward function. This is where the magic happens: this is the code that trains the car to do the right thing and not do the wrong thing.

You can choose one of the provided examples to get an idea of how they work.
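As a rough idea of what a reward function looks like, here is a minimal sketch in the spirit of a "follow the centre line" example. It assumes the `params` dictionary contains `track_width` and `distance_from_center`, as described in the AWS reward function input documentation. The band sizes and reward values are illustrative choices, not tuned numbers.

```python
def reward_function(params):
    """Reward the car for staying close to the centre line of the track."""
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Three bands around the centre line, each a fraction of the track width.
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    if distance_from_center <= marker_1:
        reward = 1.0   # very close to the centre line
    elif distance_from_center <= marker_2:
        reward = 0.5   # drifting a little
    elif distance_from_center <= marker_3:
        reward = 0.1   # near the edge of the track
    else:
        reward = 1e-3  # probably off the track

    return float(reward)
```

The closer the car stays to the centre, the bigger the treat it gets, which nudges the model toward driving down the middle of the track.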

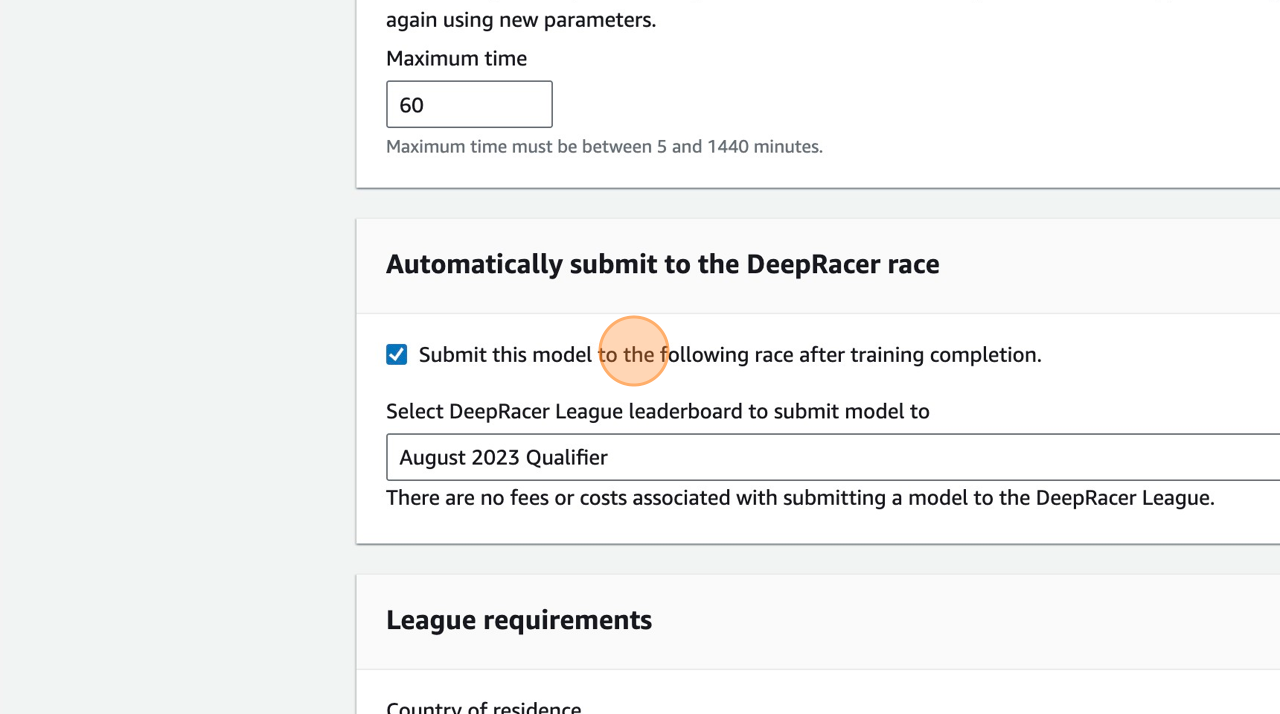

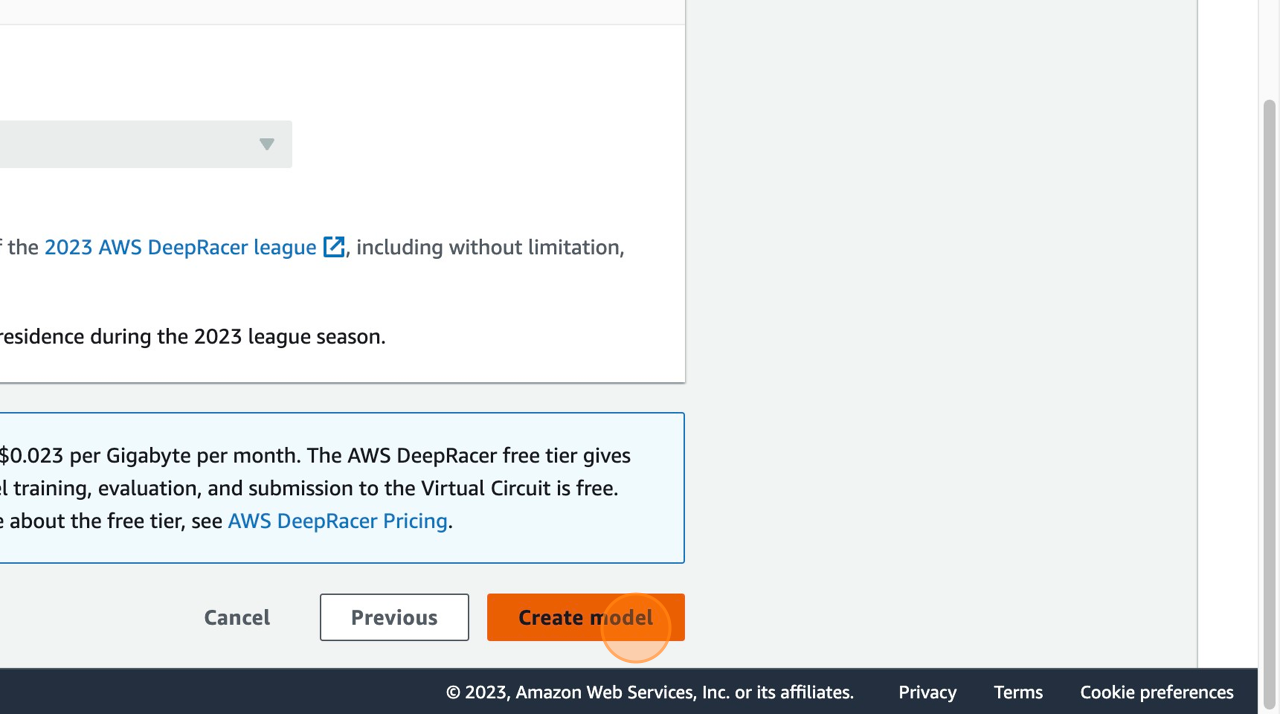

Time to submit our model for training. Make sure you untick the “Automatically submit to the DeepRacer race” option, as we’re not training for the global DeepRacer league just yet, and then click on Submit.

And now we wait…

During training

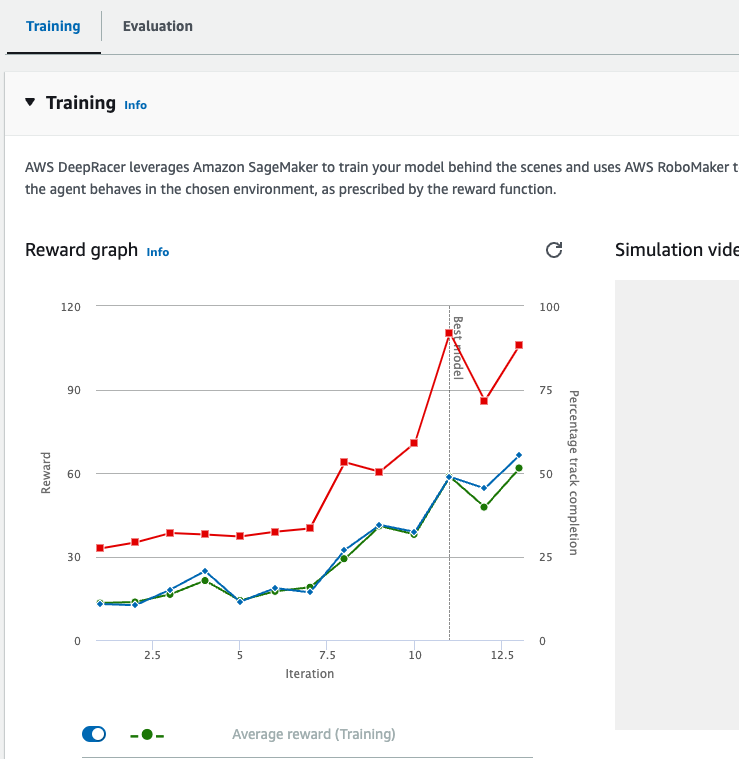

By default, your model will train for approximately 60 minutes. As your model trains, you’ll see a graph develop like the one shown here.

This graph shows the progress your car is making:

- The green line is the reward value.

- The blue and red lines represent on-track completion during training and evaluation respectively.

We’re mainly interested in the green and red lines at this point. The higher they go, the better chance your model has of getting around the track.

Once your model has trained for 60 minutes you can evaluate your model against the track that you have trained on.

Model Evaluation

Evaluation of your model is important to give you a good indication of how your model will perform on track and in a real race.

Let’s start evaluating our model.

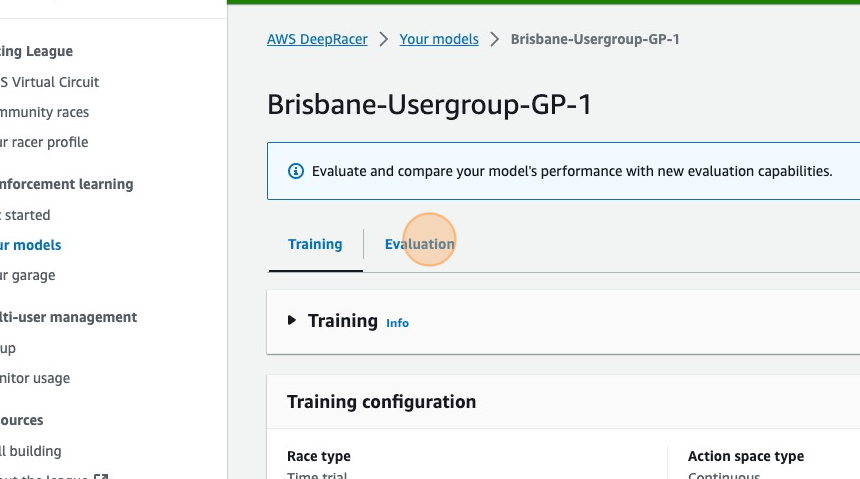

Click on the Evaluation tab in your model.

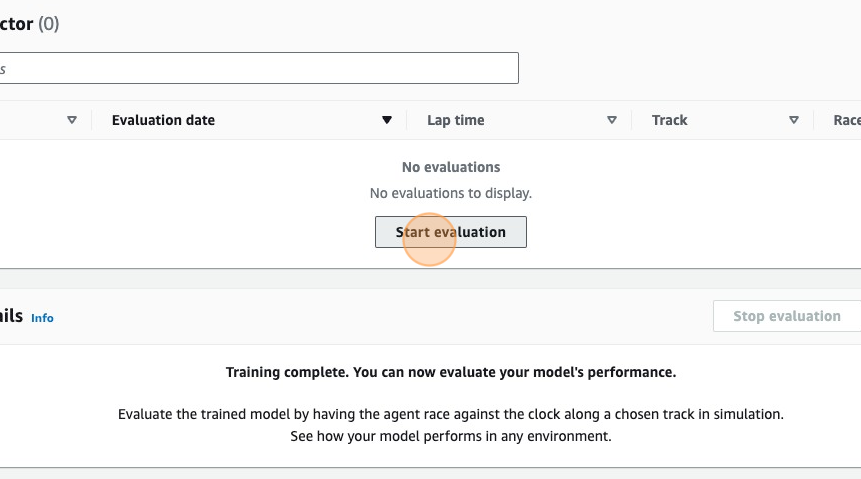

Then click “Start evaluation”.

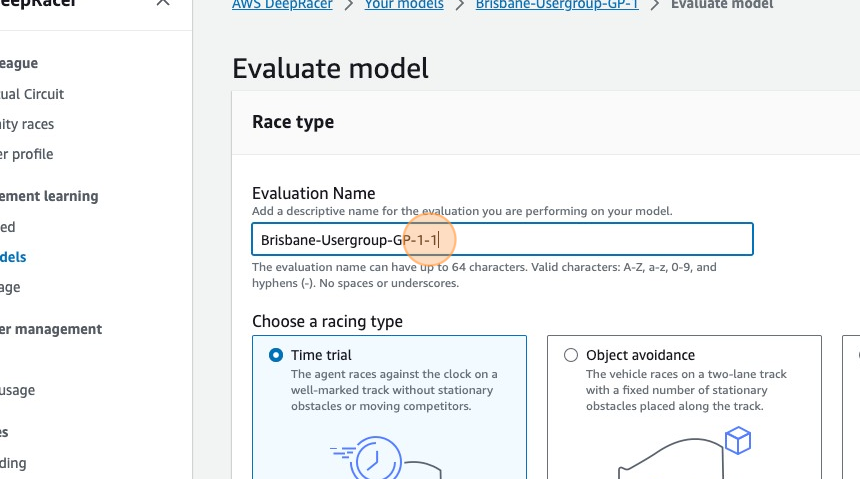

Give your evaluation a common-sense name.

Ensure the correct track is selected. It should be the same as the one you trained on.

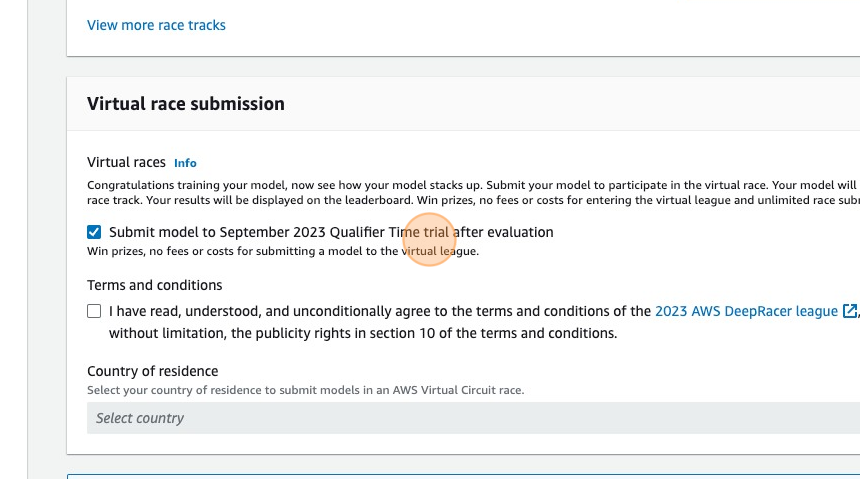

Uncheck “Submit model to September 2023 Qualifier Time trial after evaluation”

Click “Start Evaluation”

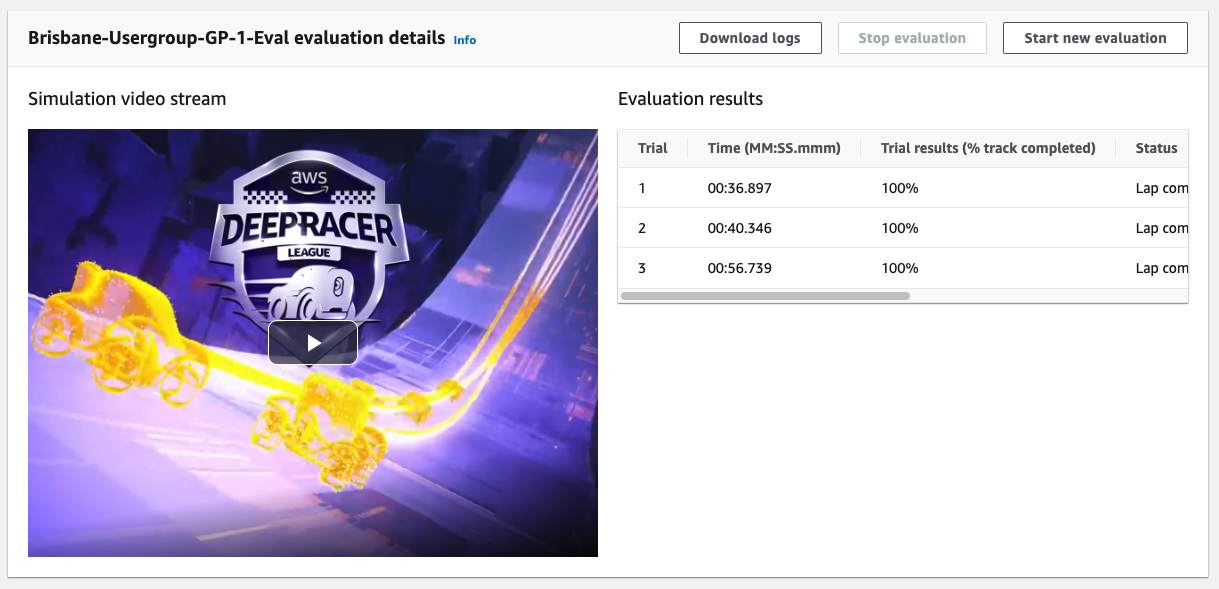

Once the evaluation is complete, you should see something like this.

This tells me my car ran 3 laps and that all 3 were successful. More information is available to help you diagnose your model, along with a video recording of your car completing its evaluation.

Hopefully you have something similar and a successful lap or two around the track.

Extra hints and tips

Reinforcement learning (RL)

Get acquainted with the basics of reinforcement learning (RL). Understand the concepts of agents, environments, actions, and rewards. DeepRacer uses RL to teach the model to drive autonomously.

Read here for more information on the basics of reinforcement learning.

Experiment with different hyperparameters like batch size, learning rate, and discount factor. These parameters affect how your model learns.
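To get a feel for why a hyperparameter like learning rate matters, here is a toy sketch that has nothing to do with DeepRacer's actual training algorithm. It runs plain gradient descent on the simple function f(x) = x**2 with a few made-up learning rates. The function name and all values are hypothetical.

```python
def minimise_square(learning_rate, start=5.0, steps=20):
    """Run gradient descent on f(x) = x**2 and return the final x."""
    x = start
    for _ in range(steps):
        gradient = 2 * x  # derivative of x**2
        x = x - learning_rate * gradient
    return x


for learning_rate in (0.01, 0.1, 1.1):
    final_x = minimise_square(learning_rate)
    print(f"learning rate {learning_rate}: final x = {final_x:.4f}")
```

The best answer here is x = 0. The smallest learning rate creeps toward it slowly, the middle one gets very close, and the largest one overshoots on every step and ends up further away than where it started. Real models are far more complicated, but the same trade-off is why it is worth changing one hyperparameter at a time and watching the training graph.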

Reward functions

Look at creating your own reward function to train the car on the good, the bad and the ugly.

The resources below can help.

https://docs.aws.amazon.com/deepracer/latest/developerguide/deepracer-reward-function-input.html

https://docs.aws.amazon.com/deepracer/latest/developerguide/deepracer-reward-function-examples.html
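As a starting point for your own reward function, here is a hedged sketch that covers a little of the good, the bad and the ugly. It assumes the `params` dictionary provides `all_wheels_on_track`, `speed` and `steering_angle`, which are listed in the input parameter documentation linked above. The steering threshold and the reward amounts are hypothetical values that you would want to experiment with.

```python
def reward_function(params):
    """Reward fast, smooth driving and penalise leaving the track."""
    all_wheels_on_track = params["all_wheels_on_track"]
    speed = params["speed"]                         # metres per second
    steering_angle = abs(params["steering_angle"])  # degrees, sign removed

    # The ugly: leaving the track earns almost nothing.
    if not all_wheels_on_track:
        return 1e-3

    # The good: start from a base reward and add a bonus for speed.
    reward = 1.0 + speed

    # The bad: discourage sharp steering, which tends to cause zig-zagging.
    steering_threshold = 15.0  # hypothetical cut-off, tune to taste
    if steering_angle > steering_threshold:
        reward *= 0.8

    return float(reward)
```

Try changing one number at a time, retraining, and comparing the evaluation results to see what each change does to your car's behaviour.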