“It Works on My Machine”: SDLC Best Practices for the Real World

This post is for anyone who’s spun up something new, got it working locally, and hit a wall when it came time to deploy. Let’s talk about why best practices still matter—especially when you’re building on AWS.

You’ve probably seen it everywhere—developers jumping head-first into the latest programming trend, riding the hype wave of vibe coding, or switching to a new language that’s suddenly hot again. And honestly, there’s nothing wrong with that. Innovation is what keeps our industry fun. But in all that excitement, it’s easy to forget the foundation: solid, repeatable software development practices.

What Are SDLC Best Practices?

Software Development Life Cycle (SDLC) best practices are the agreed guardrails that make sure what you build actually makes it out into the world, safely, consistently, and without surprising anyone along the way.

These include:

- Version control

- Continuous Integration/Continuous Deployment (CI/CD)

- Automated testing and security scans

- Repeatable builds and deployments

- Monitoring and rollback strategies

In short: they’re the practices that turn “It works on my machine” into “It works in prod—every time.”

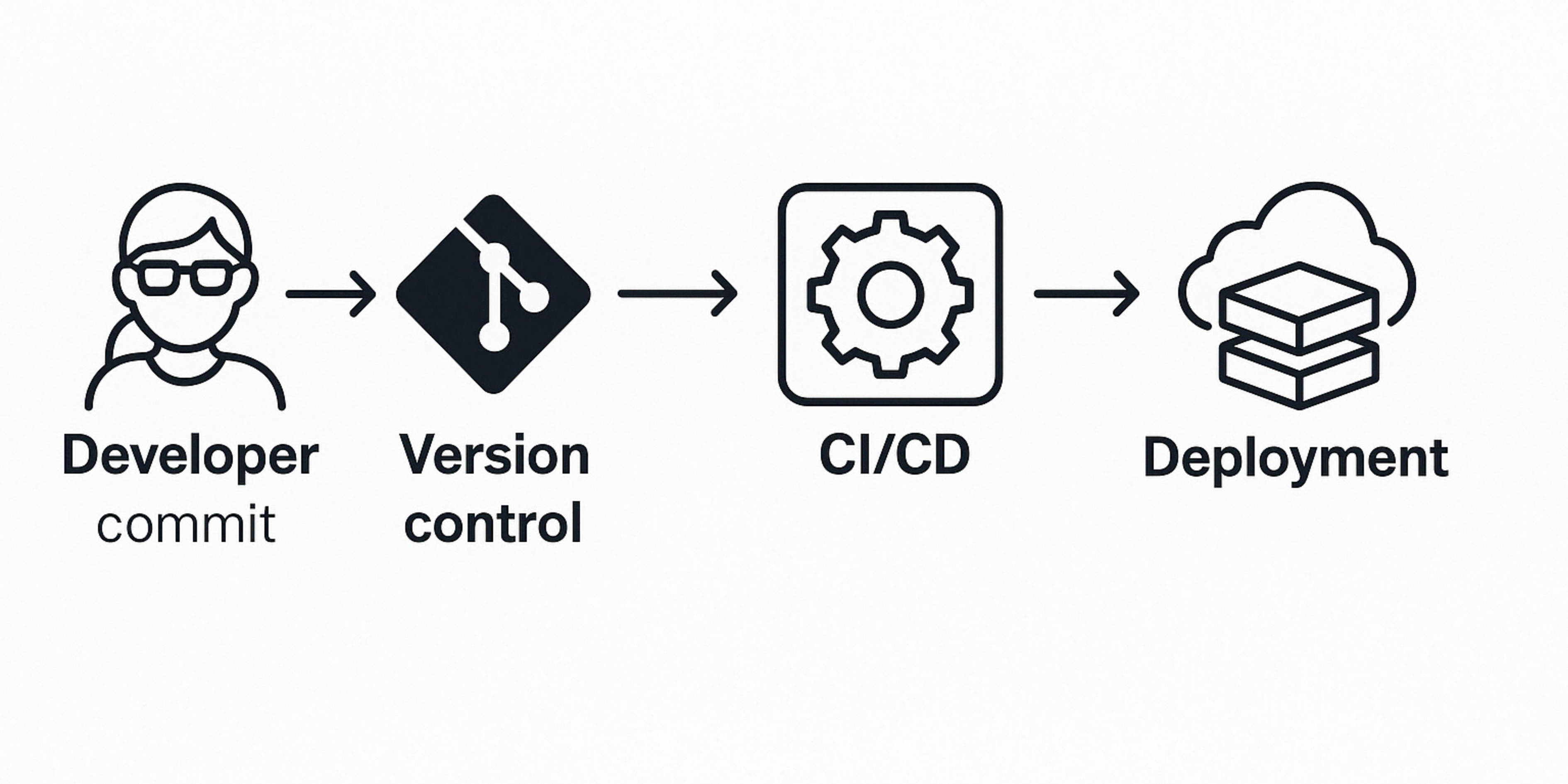

The Case for Version Control (and Doing It Right)

If you’re not using version control, you’re essentially flying blind. But using it well is just as important. Enter GitOps: a model that treats a Git repository as the single source of truth for both your infrastructure and your application code.

Every change? Tracked.

Every rollback? Simple.

Every deployment? Transparent.

In AWS, you can pair GitHub, GitLab, or Bitbucket with CodePipeline using CodeConnections to automatically trigger builds, tests, and deployments when someone pushes code. No more manual steps. No room for forgetfulness.

“Did you remember to redeploy the Lambda?” - Anyone who’s manually built something and forgot to press the deploy button

We’ve all been there. Someone’s testing a fix that should’ve worked, and it doesn’t. After 10 minutes of head-scratching, someone asks the dreaded question:

“Did you actually deploy it?”

In environments without automated deployments, these kinds of bugs are common. The fix might exist locally—or even be committed to source control—but unless it’s actually deployed, nothing changes in production.

Automating deployments with tools like AWS CodePipeline removes that uncertainty. Every change runs through the same pipeline, gets tested, and lands in production the same way, every time. No surprises. Far less room for human error. No forgotten Lambda updates.
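
Until the pipeline is in place, one cheap way to answer “did you actually deploy it?” is to compare the artifact you built with what is running. Here is a minimal sketch, assuming the deployed value is the `CodeSha256` field that Lambda reports for a zip-packaged function (the base64-encoded SHA-256 of the deployment package). The function names are hypothetical, and fetching the value from AWS is deliberately left out.

```python
import base64
import hashlib


def package_sha256_b64(package_bytes: bytes) -> str:
    """Return the base64-encoded SHA-256 digest of a deployment package."""
    digest = hashlib.sha256(package_bytes).digest()
    return base64.b64encode(digest).decode("ascii")


def is_deployed(local_package: bytes, deployed_code_sha256: str) -> bool:
    """True when the local artifact matches the hash reported for the deployed function."""
    return package_sha256_b64(local_package) == deployed_code_sha256
```

Compare the exact zip the build produced rather than a fresh rebuild: two zips built from the same source can differ byte for byte (file timestamps, for example), which would show up here as a false mismatch.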

Automate Everything with CI/CD

The real power of AWS CodePipeline and CodeBuild isn’t just automation—it’s consistency.

Once you define your pipeline, everyone on your team gets the same treatment: same linter, same security scans, same build steps. It means less troubleshooting, fewer surprises, and more focus on building features instead of fixing broken deployments.

Top tip: If you’re deploying AWS Lambda functions, automate it. Manual deployments are error-prone, especially when you’re handling environment variables, layers, or complex build steps. Using SAM or the Serverless Framework integrated into CodePipeline makes deployments safe and boring (which is what you want).

SAM: https://aws.amazon.com/serverless/sam/

Serverless: https://github.com/serverless/serverless

Speed Isn’t Just a Luxury—It’s an Advantage

One of the often-overlooked benefits of solid CI/CD implementation is speed—not just in deployment, but in learning. The faster you can deploy, the faster you can identify issues. This tight feedback loop means bugs are caught earlier, fixes are shipped faster, and teams stay in flow.

Improving deployment times can:

- Reduce operational overhead

- Improve developer morale by keeping momentum

- Shorten the time between writing code and discovering issues in production-like environments

Let’s be honest—waiting for builds and manual deploys kills momentum.

Seriously, who likes watching a compiler run? (You’d get more joy watching paint dry—with fewer errors.)

Secure the Pipeline Like You Secure Production

CI/CD pipelines often have the keys to the kingdom—deployment permissions, secrets, IAM roles. If these aren’t protected properly, they become a massive attack surface.

Here’s what you should do:

- Avoid using static access keys in your pipelines

- Use IAM Roles with fine-grained permissions for each stage

- Audit who can trigger deployments, approve changes, or modify pipeline definitions
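
To make “fine-grained permissions” something a pipeline can check, here is a rough sketch that flags `Allow` statements with wildcard actions or resources in an IAM policy document. The function names and the rule for what counts as too broad are assumptions for illustration; this is not a replacement for a proper policy analysis tool.

```python
from typing import Any


def _as_list(value: Any) -> list:
    """IAM accepts either a single string or a list for Action and Resource."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_wildcard_statements(policy: dict) -> list[str]:
    """Describe each Allow statement that grants wildcard actions or resources."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear without a list
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        label = stmt.get("Sid", f"statement {index}")
        actions = _as_list(stmt.get("Action"))
        resources = _as_list(stmt.get("Resource"))
        if any(a == "*" or a.endswith(":*") for a in actions):
            findings.append(f"{label}: wildcard action")
        if "*" in resources:
            findings.append(f"{label}: wildcard resource")
    return findings
```

Treat the output as a prompt for review rather than a verdict: some actions only work with `"Resource": "*"`, so a hit is not automatically a mistake.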

A secure SDLC isn’t just about the code—it’s about every system involved in delivering that code.

Block or Warn? Picking the Right Gate at the Right Time

Not every test needs to stop your pipeline. But some absolutely should.

A good practice is to classify tests and quality gates into:

- Blocking checks (e.g., high-severity vulnerabilities, over-permissive IAM policies)

- Advisory checks (e.g., style violations, low-severity bugs)

Deciding what’s critical enough to block a release vs. what can be flagged for future action is part of maturity. If you’re just starting out, it’s fine to begin with notifications—but you should work toward building in automated blocks where it makes sense.

Context matters. A minor issue in an internal tool might be more tolerable than the same issue in a customer-facing API.
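
The block-or-warn split can be sketched as a small gate function. This is a sketch under assumptions: the severity names, the `Finding` type, and the `block_at` threshold are all made up for illustration, and your scanners will have their own scales.

```python
from dataclasses import dataclass

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    check: str
    severity: str  # one of the SEVERITY_ORDER keys


def evaluate_gate(
    findings: list[Finding], block_at: str = "high"
) -> tuple[bool, list[Finding], list[Finding]]:
    """Split findings into blocking and advisory; return (passed, blocking, advisory)."""
    threshold = SEVERITY_ORDER[block_at]
    blocking = [f for f in findings if SEVERITY_ORDER[f.severity] >= threshold]
    advisory = [f for f in findings if SEVERITY_ORDER[f.severity] < threshold]
    return (not blocking, blocking, advisory)
```

The threshold is where context comes in: an internal tool might run with `block_at="critical"` while a customer-facing API blocks at `"high"`. A pipeline step would fail the build when `passed` is false and report the advisory list without stopping.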

Give Builders Feedback When They Need It

The earlier developers get feedback, the more likely they are to act on it. That’s why tools like Amazon Q Developer, integrated directly into the IDE, are so powerful: they offer suggestions during development, nudging builders toward better code without interrupting their flow.

Pair that with tools like:

- Amazon CodeGuru Reviewer – for automated code reviews on pull requests or commits

- Amazon Inspector – to scan Lambda functions for known vulnerabilities

This shifts security and code quality left, embedding it into the daily workflow instead of treating it as a post-build compliance hurdle.

Test Findings Are Useless If They Disappear into the Void

Automated testing in a pipeline is a great first step—but don’t let findings vanish into logs or emails. Use your ticketing system (e.g., Jira, ServiceNow) to create traceable issues for bugs or vulnerabilities.

Better yet? Link test failures to documentation or remediation guidance. That way, instead of just telling devs what broke, you help them fix it.
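
As a rough illustration, a finding can be turned into a ticket payload that carries its remediation link and a stable fingerprint, so the same finding on a later run updates the existing ticket instead of opening a new one. Everything here is hypothetical: the field names are not those of Jira or ServiceNow, and the call that actually creates the ticket is left out.

```python
import hashlib


def finding_fingerprint(check: str, location: str, rule_id: str) -> str:
    """Stable short ID derived from what was found and where, not from the wording."""
    raw = "|".join([check, location, rule_id])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()[:16]


def to_ticket(check: str, location: str, rule_id: str, summary: str, guidance_url: str) -> dict:
    """Build a tracker-agnostic ticket payload (field names are illustrative)."""
    return {
        "title": f"[{check}] {summary}",
        "fingerprint": finding_fingerprint(check, location, rule_id),
        "body": f"Location: {location}\nRule: {rule_id}\nHow to fix: {guidance_url}",
    }
```

Before creating a ticket, the pipeline would search the tracker for the fingerprint and skip or update when it already exists.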

Don’t Trust, Always Verify: Your Dependencies Change Too

Your code might not change—but your dependencies might. Every package in your build could get an update tomorrow that introduces a critical vulnerability.

That’s why regular package scanning matters.

Use tools like:

- Amazon Inspector

- OWASP Dependency-Check

- Snyk CLI for containerized workloads

Automate these scans and trigger alerts when a new vulnerability affects a package you already use.
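
The core of such a check is small. Here is a minimal sketch, assuming you already have your pinned versions and a list of advisories from whichever scanner or feed you use. The advisory shape (`id`, `package`, `fixed_in`) and the package names in the usage example are invented for illustration, and the version parsing only handles plain dotted numbers.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Parse a simple dotted numeric version such as '2.31.0' (no pre-release tags)."""
    return tuple(int(part) for part in text.split("."))


def is_older(current: str, fixed_in: str) -> bool:
    """True when current is strictly below fixed_in; '2.0' and '2.0.0' count as equal."""
    a, b = parse_version(current), parse_version(fixed_in)
    width = max(len(a), len(b))
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a < b


def affected_packages(pinned: dict[str, str], advisories: list[dict]) -> list[str]:
    """Return one alert per pinned package that is below an advisory's fixed version."""
    alerts = []
    for advisory in advisories:
        name = advisory["package"]
        if name in pinned and is_older(pinned[name], advisory["fixed_in"]):
            alerts.append(
                f"{name} {pinned[name]} is affected by {advisory['id']}; "
                f"upgrade to {advisory['fixed_in']} or later"
            )
    return alerts
```

For example, with `pinned = {"examplelib": "1.4.2"}` and an advisory `{"id": "EXAMPLE-0001", "package": "examplelib", "fixed_in": "1.5.0"}`, the function returns a single alert for `examplelib`. Running it on a schedule, not just on push, is what catches the case where your code has not changed but the advisory list has.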

Threat Modeling: Teach Teams to Think Like Adversaries

One of the most powerful things you can do for your engineering team isn’t to throw more scanners at them—it’s to teach them threat modeling.

By learning how to identify risks in their own systems, developers:

- Make smarter architecture decisions

- Write more secure code upfront

- Rely less on “security gates” and more on internalised security thinking

This reduces friction between teams and avoids costly rework after a final review flags something avoidable.

Get Hands-On: Learn by Doing with Game Days and Jams

Finally, theory only goes so far. If you want your team to feel the impact of good (and bad) SDLC practices, run an AWS GameDay or internal security jam.

These team-based, gamified events simulate real-world challenges:

- Outage recovery

- Misconfigured pipelines

- Vulnerability response

- Chaos engineering

Game days create muscle memory, build cross-team relationships, and level up your team’s confidence in handling unexpected scenarios.

This Isn’t Just for Big Teams

You might be thinking, “This sounds great for large dev teams with dedicated DevOps people—but I’m just building solo.” Here’s the thing: these practices matter even more when you’re on your own.

For solo builders, startups, and side projects:

- Automation saves your future self from debugging broken deploys at 11pm

- Security scanning protects your users—and your reputation

- CI/CD pipelines become your quiet co-pilot, running checks while you focus on features

- Version control with GitOps means you’ll never lose that “one working version” again

For teams:

- These practices create alignment, catch issues early, and build trust between devs, security, and operations.

- They reduce finger-pointing and make everyone’s life easier when something goes wrong (because it will).

Whether you’re writing code for a side project, a startup MVP, or a Fortune 500 product—good SDLC is a force multiplier. Start small. Automate what you can. And build habits now that scale later.

Final Thoughts: Build the Pipeline You’d Be Proud to Hand Off

In a world where tech stacks change weekly and everyone’s vibing on the next big thing, the best engineers are the ones who know how to move fast safely.

That’s what a good SDLC gives you.

It’s not just about having the best code—it’s about having a system that makes any code better, more secure, and easier to ship. And if you can build that system on AWS with the tools already at your fingertips? Even better.

“Le mieux est l’ennemi du bien” - Voltaire

Loosely translated: “Perfect is the enemy of good.”

You don’t need to be perfect. Just consistent, automated, and always improving.

Disclaimer(s)

- The content in this post is for informational purposes only and should not be considered as a substitute for professional advice.

- This post was also deployed using a CI/CD pipeline, which includes automated testing and deployment steps.

- As I’m lazy with image creation and don’t have the time to create images for every post, I’ve used AI generated images in this post.